Most augmented reality experiences today overlay the physical world with known information. Maps and games have garnered much attention in the consumer tech space. In the industrial world, the AR capabilities being leveraged typically fall into three categories: visualize, instruct, or guide.

As shown in *The State of Industrial Augmented Reality*, these types of use cases constitute the vast majority.

These AR experiences are triggered by recognizing predetermined markers, CAD geometries, QR codes or bar codes, or location-based and other sensor inputs. But what if the user were looking at an unidentifiable machine or part, with no context on how to operate or service it?

Artificial intelligence, and especially deep learning, is ushering in a new wave of innovation in computer vision (CV) and augmented reality (AR). The ability to perceive a wide array of environments will unlock the next generation of augmented reality use cases and empower front-line workers like never before.

Understanding the differences between classical (or traditional) computer vision and learning-based computer vision is fundamental to developing applications today and in the near future.

Industrial environments are extremely complex, with intricate procedures in place to keep mission-critical systems intact. Consider the volume of detailed information exchanged across Volvo Group’s engine quality assurance line: each engine requires 40 quality checks, with 200 possible QA variants, within engines that can have 4,500 different total engine information variants. And that is for just one plant.

Getting the right information to QA operators requires a comprehensive yet seamless digital thread, in which augmented reality delivers contextualized information in an accurate, timely, and reliable manner. Volvo Group, like many other manufacturers, cannot afford downtime in a hyper-competitive market.

In situations that demand this stringent level of granularity and accuracy, the classical approach to computer vision is the best path forward for an augmented reality application.

The classical approach is essentially a ‘design-your-own’ CV algorithm built in a design and coding environment. An engineer maps native sensor inputs (camera, GPS, accelerometer) to 3D geometries and tunes the CV algorithm to recognize objects for a specific use case. The AR engineer or experience creator then brings this CV algorithm and use case to life in a 3D design authoring environment, aligning geometries, points, features, and measurements so that the experience activates in context.
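To make the classical workflow more concrete, here is a minimal Python sketch of one of its building blocks, assuming a simple pinhole camera: 3D keypoints taken from a CAD model are projected into the image for a candidate pose, and the pose whose projection best matches the detected 2D features wins. The intrinsics (`FX`, `FY`, `CX`, `CY`), the single-axis pose search, and all function names are hypothetical illustrations, not PTC’s implementation.

```python
import math

# Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels.
FX, FY, CX, CY = 800.0, 800.0, 320.0, 240.0


def rotation_z(theta):
    """3x3 rotation matrix about the camera's optical (z) axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def project(point, rotation, translation):
    """Project one CAD point (model frame, metres) to pixel coordinates."""
    # Transform into the camera frame: X_cam = R @ X_model + t
    xc = [sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
          for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    return (FX * xc[0] / xc[2] + CX, FY * xc[1] / xc[2] + CY)


def reprojection_error(model_points, observed_px, rotation, translation):
    """Mean pixel distance between projected CAD keypoints and detected features."""
    total = 0.0
    for point, (u_obs, v_obs) in zip(model_points, observed_px):
        u, v = project(point, rotation, translation)
        total += math.hypot(u - u_obs, v - v_obs)
    return total / len(model_points)


def best_rotation(model_points, observed_px, translation, candidates_deg):
    """Brute-force search over in-plane rotations for the best CAD alignment."""
    return min(candidates_deg,
               key=lambda deg: reprojection_error(
                   model_points, observed_px,
                   rotation_z(math.radians(deg)), translation))


# Corners of a hypothetical 0.2 m square face, 1 m in front of the camera,
# "observed" at a 30-degree in-plane rotation.
square = [(-0.1, -0.1, 0.0), (0.1, -0.1, 0.0), (0.1, 0.1, 0.0), (-0.1, 0.1, 0.0)]
t = (0.0, 0.0, 1.0)
observed = [project(p, rotation_z(math.radians(30)), t) for p in square]
print(best_rotation(square, observed, t, range(0, 90, 5)))  # 30
```

A production system would solve for all six pose parameters with a nonlinear solver (for example a Perspective-n-Point method) rather than a brute-force search over one angle; the search is used here only to keep the alignment idea visible.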

Given its custom configurability, classical CV is popular in heavy-engineering use cases where high asset fidelity is required. Although deep-learning methods are improving, it remains difficult to measure depth in images accurately with them. Classical CV is still the better option here, because algorithm design and sensor choice make it naturally better suited to recognizing intricate 3D structures.
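The role of sensor choice in depth measurement can be illustrated with classical stereo triangulation. The sketch below assumes a rectified two-camera rig (the article does not say which sensors are used): depth follows from disparity as Z = f·B/d, and a one-pixel disparity error grows into a depth error of roughly Z²/(f·B), so a longer baseline or focal length directly buys accuracy. The function names and example numbers are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Classical stereo triangulation for a rectified pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m, focal_px, baseline_m, disparity_err_px=1.0):
    """First-order depth error from a disparity error: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


# Hypothetical rig: 800 px focal length, 10 cm baseline, 40 px measured disparity.
z = depth_from_disparity(40.0, 800.0, 0.10)
print(z)                                   # 2.0 (metres)
print(depth_uncertainty(z, 800.0, 0.10))   # about 0.05 m per pixel of disparity error
```

Doubling the baseline to 20 cm halves the depth error at the same distance, which is the kind of trade-off an engineer controls directly in the classical approach.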

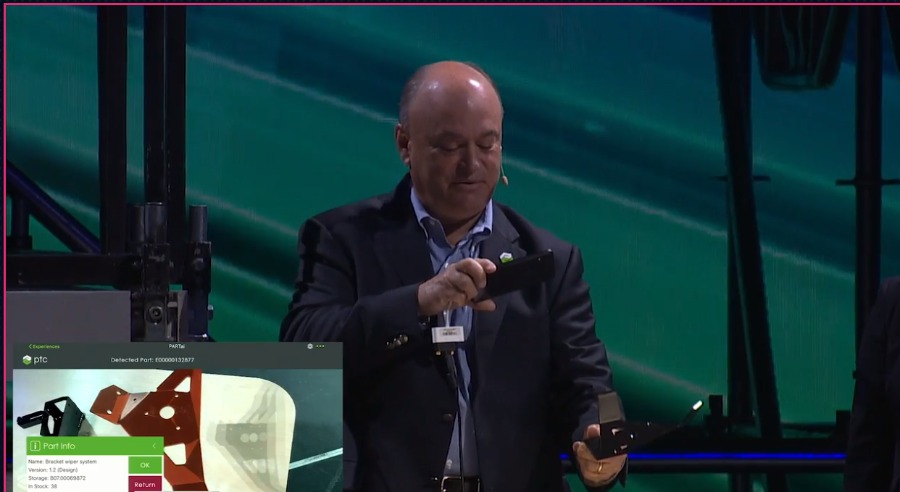

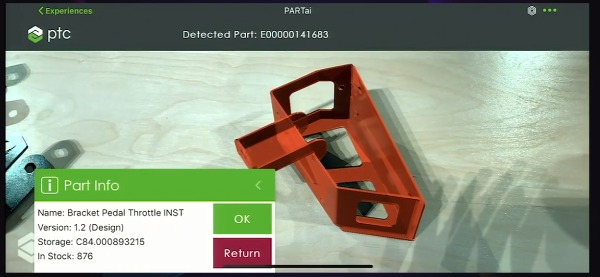

At LiveWorx 2019, PTC CEO Jim Heppelmann presented a demo in which the AR experience automatically recognized a spare part. The part had no bar codes or target markers, yet the computer vision system detected the part, its model, and how many were in stock, all in a matter of seconds.

Artificial intelligence, and more specifically deep learning embedded in the augmented reality application, enabled this automatic object recognition. The demo used the part’s CAD data files to train neural networks in the cloud by feeding them many artificial examples of the part, which produced a lightweight inferenced AI model that runs in the augmented reality application.

PTC Computer Vision Field Lead, Director John Schavemaker, explains further: “In creating this AI-driven AR demo or with any deep-learning AR application, the inferenced model is only as valuable as the training data, which in this case is artificially created by rendering the 3D CAD model in different positions and orientations and feeds the neural network.”
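The synthetic-data step described in the quote can be sketched in a few lines. This is a simplified illustration under stated assumptions, not PTC’s pipeline: instead of rendering full images, it samples random orientations and distances for a hypothetical box-shaped part, projects the CAD vertices through an assumed pinhole camera, and records the resulting 2D bounding box as the label a detector could be trained on. All names, dimensions, and ranges are hypothetical.

```python
import math
import random

# Hypothetical pinhole intrinsics (pixels).
FX, FY, CX, CY = 800.0, 800.0, 320.0, 240.0

# Hypothetical "CAD model": the 8 corners of a 0.20 x 0.10 x 0.05 m part (metres).
PART_VERTICES = [(x, y, z) for x in (-0.10, 0.10)
                 for y in (-0.05, 0.05) for z in (-0.025, 0.025)]


def euler_to_matrix(rx, ry, rz):
    """Rotation matrix R = Rz @ Ry @ Rx from Euler angles in radians."""
    ca, sa = math.cos(rx), math.sin(rx)
    cb, sb = math.cos(ry), math.sin(ry)
    cg, sg = math.cos(rz), math.sin(rz)
    return [[cg * cb, cg * sb * sa - sg * ca, cg * sb * ca + sg * sa],
            [sg * cb, sg * sb * sa + cg * ca, sg * sb * ca - cg * sa],
            [-sb, cb * sa, cb * ca]]


def render_sample(rng, label):
    """'Render' one synthetic example: a random pose and its projected 2D box."""
    rot = euler_to_matrix(*(rng.uniform(-math.pi, math.pi) for _ in range(3)))
    distance = rng.uniform(0.5, 2.0)  # metres in front of the camera
    us, vs = [], []
    for p in PART_VERTICES:
        x, y, z = (sum(rot[i][j] * p[j] for j in range(3)) for i in range(3))
        z += distance  # translate the part along the optical axis
        us.append(FX * x / z + CX)
        vs.append(FY * y / z + CY)
    return {"label": label, "distance_m": distance,
            "bbox": (min(us), min(vs), max(us), max(vs))}


def generate_dataset(label, n, seed=0):
    """Many labelled views of one part, reproducible via the seed."""
    rng = random.Random(seed)
    return [render_sample(rng, label) for _ in range(n)]


samples = generate_dataset("bracket", 1000)
print(len(samples), samples[0]["label"])  # 1000 bracket
```

In a real pipeline the same pose loop would drive a renderer that produces images, typically with varied lighting, backgrounds, and occlusion, and the projected boxes would serve as their ground truth.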

The importance of training data is common to every industry: the self-driving algorithms of autonomous vehicles are trained on millions of miles of aggregated image and video driving data, and medical models are fed millions of patient data points to diagnose cancer earlier and more accurately.

Applying this mindset to the industrial world could have an immense impact. To take the LiveWorx example into a real-world scenario, imagine a heavy-equipment OEM that empowers its dealership technician network with AI-enabled augmented reality. Technicians could recognize any part on a deployed machine and have the steps to repair or replace it, a shift that would drastically improve service KPIs on a global basis.

AI could also significantly disrupt quality control and assurance, with AR recognizing defects in industrial products, assets, production lines, and more that are unnoticeable to the human eye. Deep learning could also make factories safer, with AR preemptively alerting workers to unsafe situations.

What is unique about the industrial world is just how unique it is: innumerable items, parts, products, assets, machines, and processes span its operations. Scaling an AR application through a classical approach, where an engineer must write a unique AR experience for each object across operations, is time-consuming and costly. Finding an image of each unique object, especially a very detailed one like the Volvo engine, would also be a massive undertaking and prone to inaccuracies.

This positions CAD files as a crucial source of training data for neural networks and for scaling AR across the enterprise.

“The CAD data is indeed the key: using the CAD data, one can artificially generate the many visual examples to train a deep-learning network using the simple input – desired output paradigm,” says Schavemaker.
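The input and desired-output pairing can be shown with a deliberately tiny example. To keep it short and dependency-free, this sketch swaps the deep-learning network for a nearest-centroid classifier: synthetic observations are generated from two hypothetical CAD definitions (nominal length and width plus simulated measurement noise), each is paired with the part name as its desired output, and the classifier learns one average feature vector per part. Part names, dimensions, and the noise level are assumptions for illustration only.

```python
import math
import random

# Hypothetical CAD definitions: nominal length and width (metres) of two parts.
CAD_PARTS = {"bracket": (0.20, 0.10), "flange": (0.12, 0.12)}


def synthesize(rng, dims, noise=0.01):
    """Simulate one observation of a part: CAD dimensions plus measurement noise."""
    return tuple(d + rng.gauss(0.0, noise) for d in dims)


def make_training_set(n_per_part, seed=0):
    """Each pair is (input features, desired output label)."""
    rng = random.Random(seed)
    return [(synthesize(rng, dims), name)
            for name, dims in CAD_PARTS.items() for _ in range(n_per_part)]


def train_nearest_centroid(pairs):
    """Learn one mean feature vector per label."""
    sums, counts = {}, {}
    for x, label in pairs:
        s = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            s[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in s) for label, s in sums.items()}


def predict(centroids, x):
    """Return the label whose centroid is closest to the observation."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))


centroids = train_nearest_centroid(make_training_set(200))
print(predict(centroids, (0.19, 0.11)))  # bracket
```

A deep network learns a far richer mapping from rendered pixels rather than two hand-picked measurements, but the training contract is the same: CAD-derived inputs, each paired with the answer the model should produce.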

CAD data includes all the unique parameters and annotations of an item’s digital definition, enabling AI to quickly recognize it in the real world. Better training data in the cloud improves the inferenced model at the edge, which makes better use of the AR device’s native compute and improves recognition. Companies that hold this important definition data for assets spanning factory floors and remote sites hold the key to unlocking next-generation AR use cases powered by AI.

The chosen CV method will depend on the use case, but industrial enterprises will likely use a hybrid of both in different scenarios, and even within the same application. A deep-learning algorithm in AR would be better suited to identifying an object, while a higher-fidelity classical model can track, map, and measure it more accurately. Scalable enterprise AR product portfolios will be fundamental to enabling this necessary model change and broadening AR’s impact on the surrounding environment.
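A hybrid pipeline of this kind might be organized along the lines below. This is a hypothetical sketch: the `Detection` record stands in for the output of a deep-learning recognizer (not implemented here), the per-part widths stand in for values read from CAD data, and the classical step is a simple pinhole range estimate, Z = f·W/w, that assumes the part roughly faces the camera.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical CAD lookup: real-world width (metres) of each recognizable part.
CAD_WIDTH_M = {"bracket": 0.20, "flange": 0.12}


@dataclass
class Detection:
    """Stand-in for a learned recognizer's output (the network itself is not shown)."""
    label: str
    confidence: float
    bbox_width_px: float


def estimate_distance_m(focal_px: float, real_width_m: float, width_px: float) -> float:
    """Classical pinhole range estimate Z = f * W / w (assumes a fronto-parallel part)."""
    return focal_px * real_width_m / width_px


def hybrid_step(det: Detection, focal_px: float = 800.0,
                min_confidence: float = 0.8) -> Optional[dict]:
    """Learned model says *what* the object is; classical geometry says *where*."""
    if det.confidence < min_confidence or det.label not in CAD_WIDTH_M:
        return None  # not confidently identified; the caller can try another method
    return {"label": det.label,
            "distance_m": estimate_distance_m(focal_px, CAD_WIDTH_M[det.label],
                                              det.bbox_width_px)}


print(hybrid_step(Detection("bracket", 0.93, 80.0)))  # {'label': 'bracket', 'distance_m': 2.0}
print(hybrid_step(Detection("bracket", 0.40, 80.0)))  # None
```

Keeping the two stages behind a narrow interface like this would let either side be upgraded independently, for example swapping the range estimate for full model-based tracking once the object is identified.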

These innovative AR applications will reach their full potential as they weave their way into the digital thread and integrate with complementary technologies. The IIoT will provide real-time telemetry and operational data on the object recognized through AR, as well as a bridge to information from other relevant business systems.

In the LiveWorx example, this information could include details on service parts, warranties, inventory, suppliers, or deliveries. AR built with these development methods will provide the entryway into the industrial ecosystem and the final link in converging the physical and digital worlds.

David Immerman is a business analyst on PTC’s Corporate Marketing team, providing thought leadership on technologies, trends, markets, and other topics. Previously, David was an industry analyst in 451 Research’s Internet of Things channel, primarily covering the smart transportation space and automotive technology markets, including fleet telematics, connected cars, and autonomous vehicles. He also spent time researching IoT-enabling technologies and other industry verticals, including industrial. Prior to 451 Research, David conducted market research at IDC.

©Copyright 2026. All rights reserved by Modelcam Technologies Private Limited PUNE.